Trying Out Talos Linux

Introduction

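Talos Linux is a secure, minimal, read-only operating system designed from the ground up for Kubernetes. It aims to provide a lightweight, efficient, and secure way to run Kubernetes clusters. Its key features are: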

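Security: Talos Linux reduces its attack surface through measures such as a read-only file system and a minimal software stack.

Immutability: once deployed, the system is immutable. Changes to the OS are made by rolling out a new system image rather than by modifying the running system, which lowers the risk of tampering.

Lightweight: Talos Linux ships only the components needed to run Kubernetes, which keeps resource consumption low.

Automated management: all system management goes through an API, so it integrates easily into automated workflows such as GitOps-based cluster management.

No traditional user interface: Talos Linux offers no conventional user interface or shell on the node; every operation is performed through declarative configuration and the API.

Runs in many environments: Talos Linux runs in the cloud, on virtual machines, and on bare metal, which suits a wide range of deployment scenarios.

Open source: Talos Linux is open source, backed by Sidero Labs, and has an active community that users can join to help develop and improve the project.

Tight Kubernetes integration: Talos Linux is tightly integrated with Kubernetes and provides a simple way to deploy and manage clusters.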

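Together, these features give users a modern, efficient, and secure operating system dedicated to Kubernetes. Its goal is to simplify cluster deployment and operations while providing strong security guarantees.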

Environment

- Talos version: 1.7.6 (http://dl-oss.vqiu.cn/iso/talos-1.7.6-amd64.iso)

- Platform: Proxmox

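Relaying the container images

Because of network restrictions in mainland China, the container images that Talos depends on must be made available locally before starting. Version 1.7.6 depends on the following images: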

ghcr.io/siderolabs/flannel:v0.25.3

ghcr.io/siderolabs/install-cni:v1.7.0-2-g7c627a8

registry.k8s.io/coredns/coredns:v1.11.1

gcr.io/etcd-development/etcd:v3.5.13

registry.k8s.io/kube-apiserver:v1.30.3

registry.k8s.io/kube-controller-manager:v1.30.3

registry.k8s.io/kube-scheduler:v1.30.3

registry.k8s.io/kube-proxy:v1.30.3

ghcr.io/siderolabs/kubelet:v1.30.3

ghcr.io/siderolabs/installer:v1.7.6

registry.k8s.io/pause:3.8

1. Run docker-registry

# cat >docker-compose.yaml<<EOF
services:
  registry:
    image: registry.cn-shenzhen.aliyuncs.com/shuhui/registry:2
    container_name: registry
    restart: always
    volumes:
      - /data/docker-registry:/var/lib/registry:rw
    ports:
      - "5000:5000"
EOF

# docker-compose up -d

2. Store the images (here they are relayed through an Aliyun registry)
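The loop that follows uses two Bash parameter expansions that are easy to confuse. `${img##*/}` removes the longest prefix ending in `/` and leaves only `name:tag`, which is the flat name used in the Aliyun namespace. `${img#*/}` removes only the registry host and keeps the repository path. A minimal sketch of the mapping follows; the helper names `relay_ref` and `mirror_ref` are made up for illustration, `hub` is the same placeholder namespace as in the script, and nothing here calls docker:

```shell
#!/usr/bin/env bash
# Illustrative helpers (hypothetical names); they only build strings.
hub=registry.cn-shenzhen.aliyuncs.com/xxx   # placeholder namespace, as in the script

# Where the relayed copy lives: the hub plus the last path component (name:tag).
relay_ref() {
  local img=$1
  printf '%s/%s\n' "$hub" "${img##*/}"
}

# Where the local registry must store it: everything after the registry host.
mirror_ref() {
  local img=$1
  printf 'localhost:5000/%s\n' "${img#*/}"
}

for img in ghcr.io/siderolabs/flannel:v0.25.3 \
           registry.k8s.io/coredns/coredns:v1.11.1 \
           registry.k8s.io/pause:3.8; do
  printf '%s\n  pull: %s\n  push: %s\n' "$img" "$(relay_ref "$img")" "$(mirror_ref "$img")"
done
```

Keeping the repository path on the local side matters because a mirror endpoint is queried with the original repository name, so `ghcr.io/siderolabs/flannel` is looked up as `siderolabs/flannel` on the local registry.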

hub=registry.cn-shenzhen.aliyuncs.com/xxx
images=(
  ghcr.io/siderolabs/flannel:v0.25.3
  ghcr.io/siderolabs/install-cni:v1.7.0-2-g7c627a8
  registry.k8s.io/coredns/coredns:v1.11.1
  gcr.io/etcd-development/etcd:v3.5.13
  registry.k8s.io/kube-apiserver:v1.30.3
  registry.k8s.io/kube-controller-manager:v1.30.3
  registry.k8s.io/kube-scheduler:v1.30.3
  registry.k8s.io/kube-proxy:v1.30.3
  ghcr.io/siderolabs/kubelet:v1.30.3
  ghcr.io/siderolabs/installer:v1.7.6
  registry.k8s.io/pause:3.8
)
for img in "${images[@]}"
do
  # Pull the relayed copy, stored in the hub under its bare name:tag
  docker pull "${hub}/${img##*/}"
  # Retag with the original repository path (registry host stripped)
  docker tag "${hub}/${img##*/}" "localhost:5000/${img#*/}"
  docker push "localhost:5000/${img#*/}"
done

Creating the Kubernetes cluster

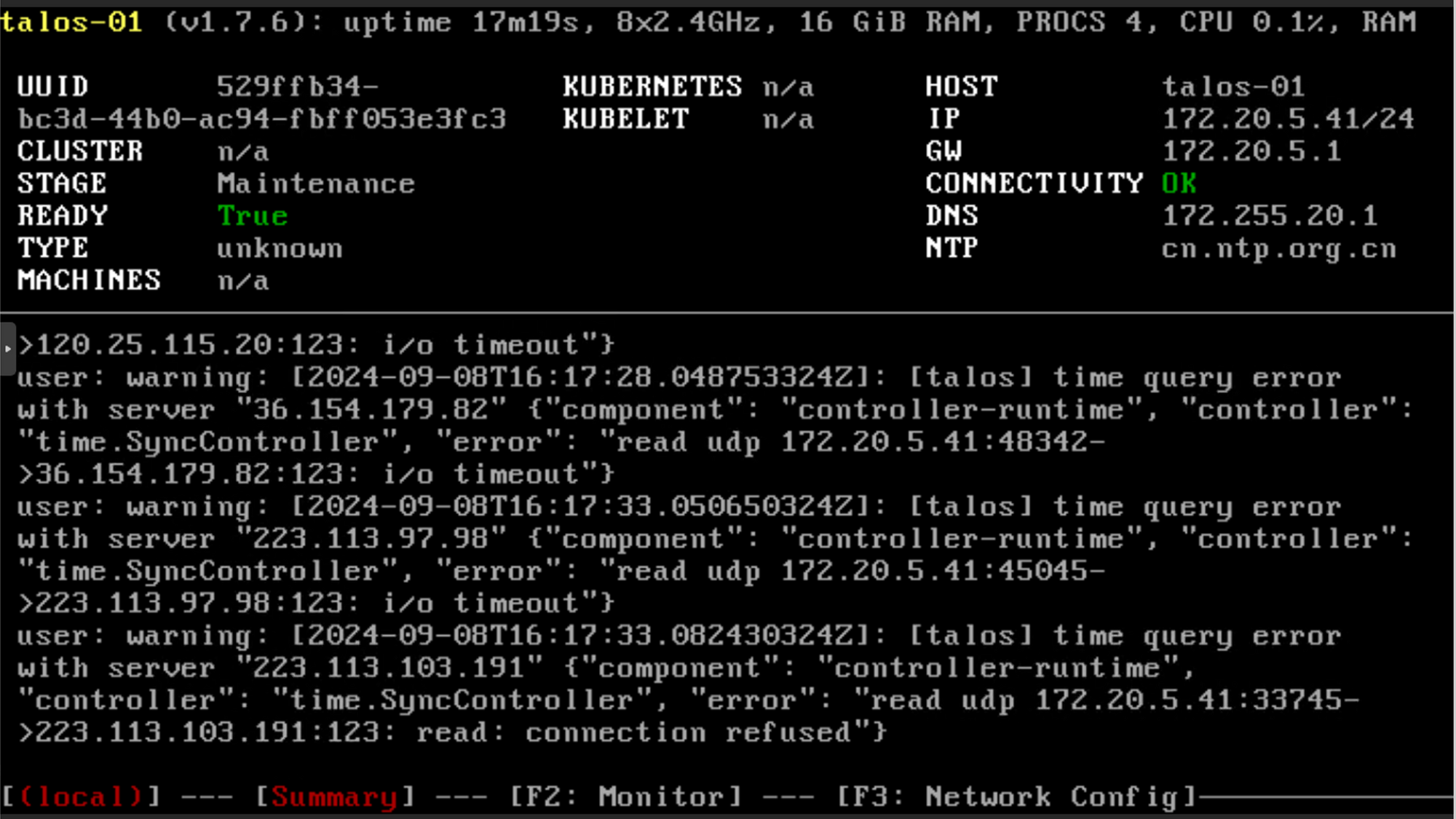

1. Install Talos from the ISO

Attach the ISO to the VM's virtual CD-ROM drive and configure the IP address.

2. Generate the configuration files

Download the talosctl tool, then run:

# export CONTROL_PLANE_IP=172.20.5.41

# talosctl gen config talos-proxmox-cluster https://$CONTROL_PLANE_IP:6443 --output-dir . --install-image registry.cn-shenzhen.aliyuncs.com/shuhui/installer:v1.7.6 \
  --registry-mirror docker.io=http://172.20.5.5:5000 \
  --registry-mirror gcr.io=http://172.20.5.5:5000 \
  --registry-mirror ghcr.io=http://172.20.5.5:5000 \
  --registry-mirror registry.k8s.io=http://172.20.5.5:5000

Note: the install image does not support HTTP, so --install-image above points at the Aliyun registry rather than at the local HTTP mirror.
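The four `--registry-mirror` flags differ only in the registry name, so they can be generated from a list. The sketch below prints the flags instead of running talosctl; `MIRROR` and `mirror_flags` are illustrative names, not part of talosctl:

```shell
#!/bin/sh
MIRROR=http://172.20.5.5:5000   # local registry set up in the previous section

# Print one "--registry-mirror <registry>=<endpoint>" pair per registry argument.
mirror_flags() {
  for reg in "$@"; do
    printf '%s %s=%s\n' --registry-mirror "$reg" "$MIRROR"
  done
}

# Dry run: show the flags that would be appended to `talosctl gen config`.
mirror_flags docker.io gcr.io ghcr.io registry.k8s.io

# To splice them into the real command (unquoted on purpose, so the shell splits
# the output into separate arguments; safe only because no value contains spaces):
#   talosctl gen config talos-proxmox-cluster https://$CONTROL_PLANE_IP:6443 \
#     --output-dir . --install-image <installer image> \
#     $(mirror_flags docker.io gcr.io ghcr.io registry.k8s.io)
```

Generating the flags this way keeps the mirror endpoint in one place, which helps if the registry address changes later.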

3. 创建第1台控制平面

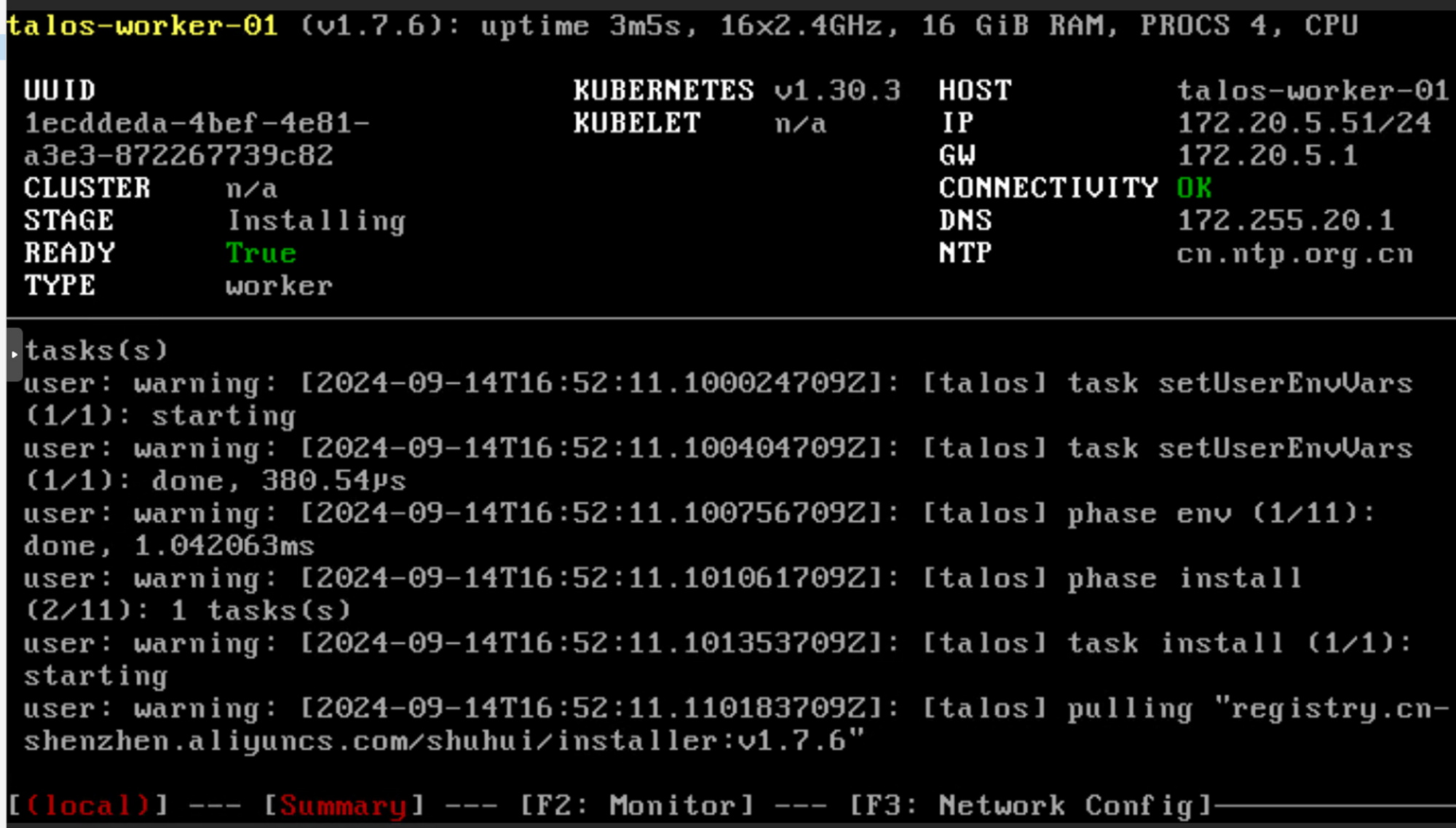

# talosctl apply-config --insecure --nodes $CONTROL_PLANE_IP --file controlplane.yaml4. 创建数据平面(worker)节点

# talosctl apply-config --insecure --nodes 172.20.5.51 --file worker.yaml

# talosctl apply-config --insecure --nodes 172.20.5.52 --file worker.yaml
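With more than a couple of workers, repeating the command by hand becomes error-prone. A small sketch that loops over the worker addresses: it prints the commands by default and only executes them when `DRY_RUN=0` is set. `apply_workers` and `DRY_RUN` are names invented for this example:

```shell
#!/bin/sh
# Apply worker.yaml to every address given as an argument.
# DRY_RUN defaults to 1, so nothing is sent to the nodes unless it is set to 0.
apply_workers() {
  for ip in "$@"; do
    if [ "${DRY_RUN:-1}" = "0" ]; then
      talosctl apply-config --insecure --nodes "$ip" --file worker.yaml || return 1
    else
      echo "talosctl apply-config --insecure --nodes $ip --file worker.yaml"
    fi
  done
}

# Prints the two commands from this step without contacting the nodes.
apply_workers 172.20.5.51 172.20.5.52
```

Once the printed commands look right, running `DRY_RUN=0 apply_workers 172.20.5.51 172.20.5.52` applies the configuration and stops at the first failure.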

5. Point talosctl at the cluster

# export TALOSCONFIG="talosconfig"

# talosctl config endpoint $CONTROL_PLANE_IP

# talosctl config node $CONTROL_PLANE_IP

6. Bootstrap etcd

# talosctl bootstrap

7. Generate the kubeconfig

# talosctl kubeconfig .

Routine operations with talosctl

- 节点关机

# export TALOSCONFIG=/root/.talos/config

# talosctl shutdown -n 172.20.5.51

watching nodes: [172.20.5.51]

* 172.20.5.51: events check condition met

# talosctl shutdown -n 172.20.5.52

watching nodes: [172.20.5.52]

* 172.20.5.52: events check condition met

# talosctl shutdown -n 172.20.5.41

watching nodes: [172.20.5.41]

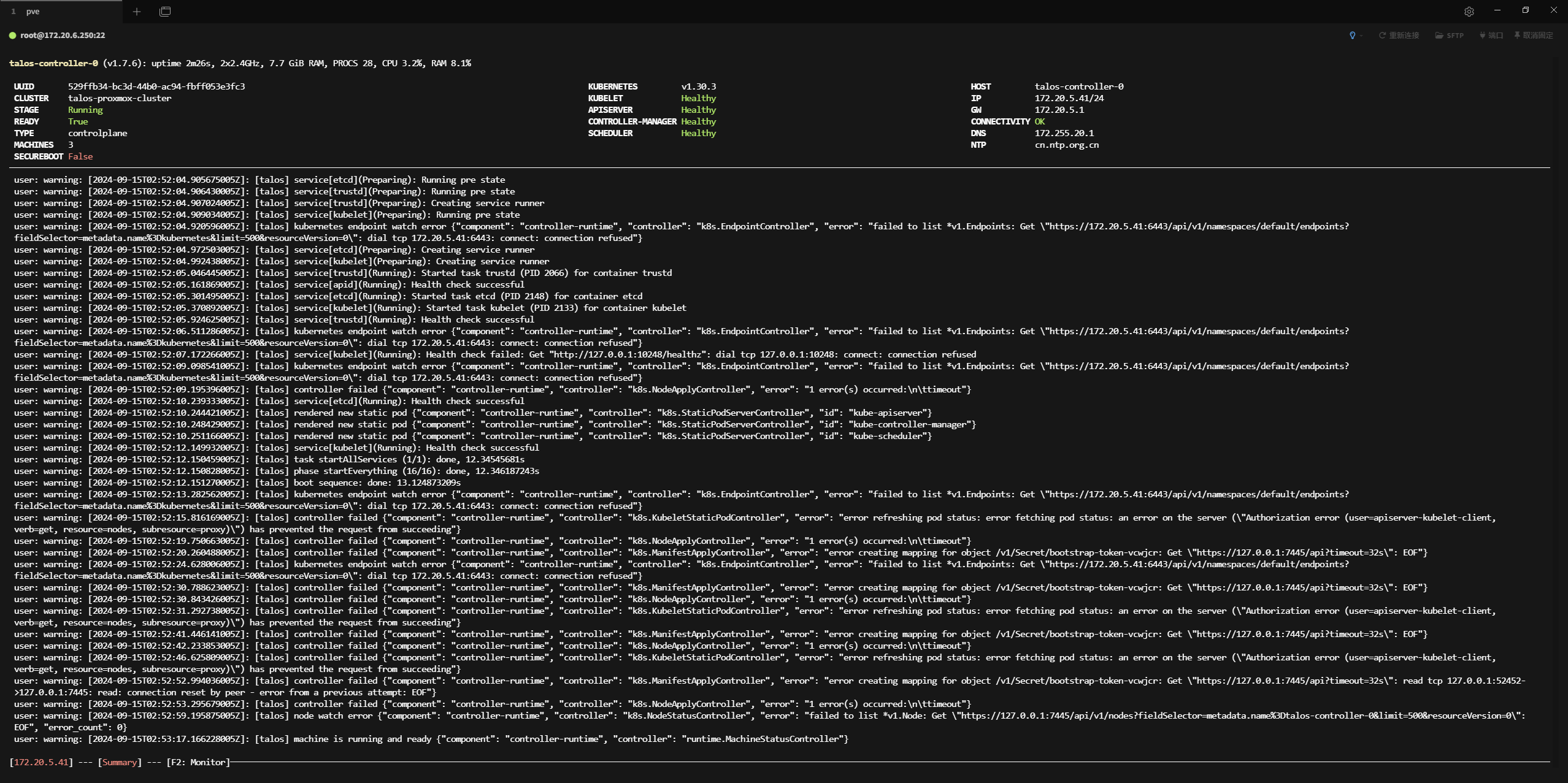

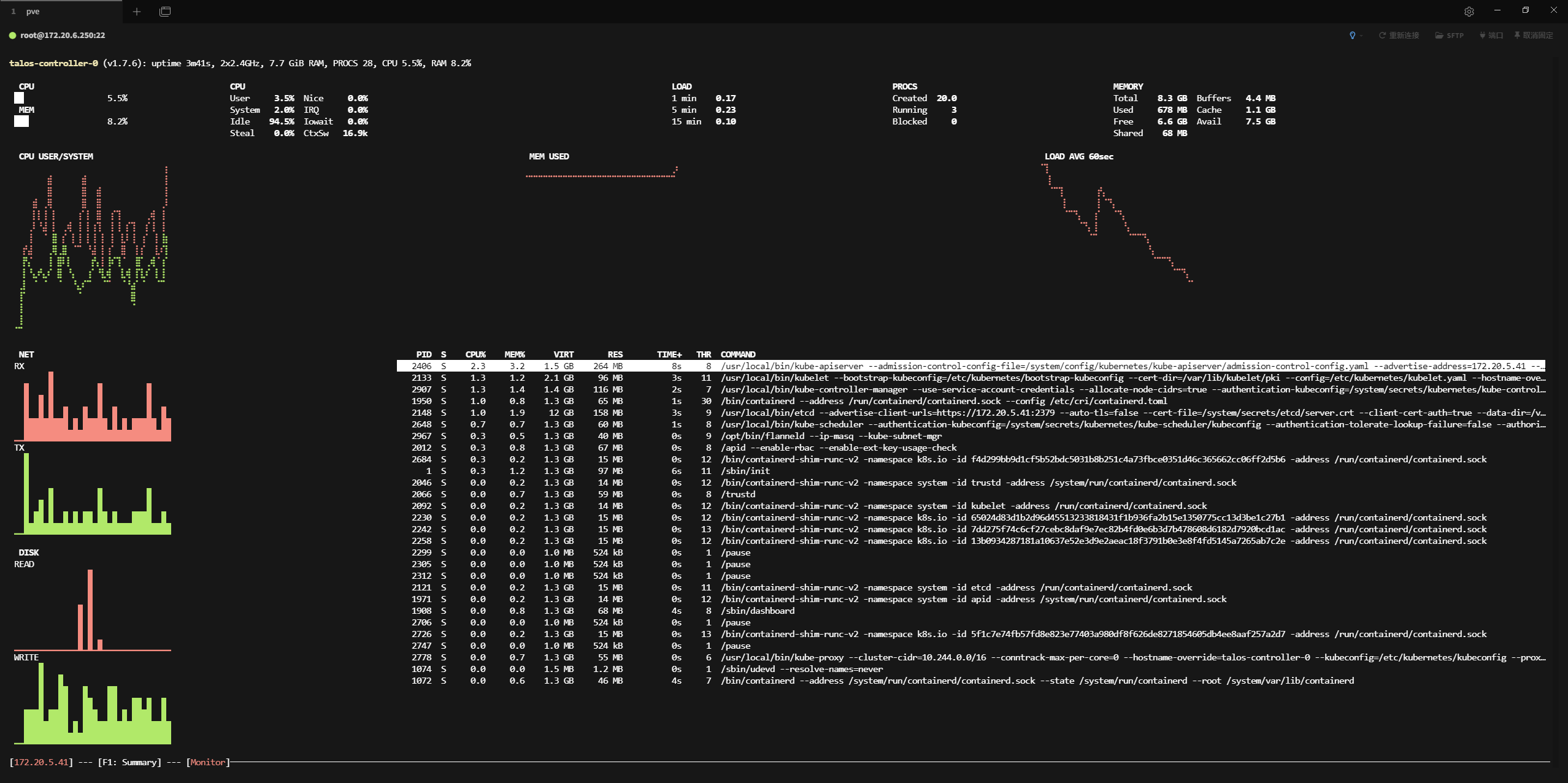

* 172.20.5.41: events check condition met- 集群状态可视化

# talosctl dashboard

- Node mount status

# talosctl mount

NODE FILESYSTEM SIZE(GB) USED(GB) AVAILABLE(GB) PERCENT USED MOUNTED ON

172.20.5.41 devtmpfs 4.12 0.00 4.12 0.00% /dev

172.20.5.41 tmpfs 4.16 0.00 4.16 0.02% /run

172.20.5.41 tmpfs 4.16 0.00 4.16 0.01% /system

172.20.5.41 tmpfs 0.07 0.00 0.07 0.00% /tmp

172.20.5.41 /dev/loop0 0.07 0.07 0.00 100.00% /

172.20.5.41 tmpfs 4.16 0.00 4.16 0.00% /dev/shm

172.20.5.41 tmpfs 4.16 0.00 4.16 0.01% /etc/cri/conf.d/hosts

172.20.5.41 overlay 4.16 0.00 4.16 0.01% /usr/etc/udev

172.20.5.41 /dev/sda5 0.10 0.01 0.09 6.31% /system/state

172.20.5.41 /dev/sda6 52.36 2.29 50.07 4.37% /var

172.20.5.41 overlay 52.36 2.29 50.07 4.37% /etc/cni

172.20.5.41 overlay 52.36 2.29 50.07 4.37% /etc/kubernetes

172.20.5.41 overlay 52.36 2.29 50.07 4.37% /usr/libexec/kubernetes

172.20.5.41 overlay 52.36 2.29 50.07 4.37% /opt

172.20.5.41 overlay 52.36 2.29 50.07 4.37% /run/containerd/io.containerd.runtime.v2.task/system/kubelet/rootfs

172.20.5.41 overlay 52.36 2.29 50.07 4.37% /run/containerd/io.containerd.runtime.v2.task/system/etcd/rootfs

172.20.5.41 shm 0.07 0.00 0.07 0.00% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/65024d83d1b2d96d45513233818431f1b936fa2b15e1350775cc13d3be1c27b1/shm

172.20.5.41 shm 0.07 0.00 0.07 0.00% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/7dd275f74c6cf27cebc8daf9e7ec82b4fd0e6b3d7b478608d6182d7920bcd1ac/shm

172.20.5.41 shm 0.07 0.00 0.07 0.00% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/13b0934287181a10637e52e3d9e2aeac18f3791b0e3e8f4fd5145a7265ab7c2e/shm

172.20.5.41 overlay 52.36 2.29 50.07 4.37% /run/containerd/io.containerd.runtime.v2.task/k8s.io/7dd275f74c6cf27cebc8daf9e7ec82b4fd0e6b3d7b478608d6182d7920bcd1ac/rootfs

172.20.5.41 overlay 52.36 2.29 50.07 4.37% /run/containerd/io.containerd.runtime.v2.task/k8s.io/65024d83d1b2d96d45513233818431f1b936fa2b15e1350775cc13d3be1c27b1/rootfs

172.20.5.41 overlay 52.36 2.29 50.07 4.37% /run/containerd/io.containerd.runtime.v2.task/k8s.io/13b0934287181a10637e52e3d9e2aeac18f3791b0e3e8f4fd5145a7265ab7c2e/rootfs

172.20.5.41 overlay 52.36 2.29 50.07 4.37% /run/containerd/io.containerd.runtime.v2.task/k8s.io/97fa705598ad044d14253c31878d63f8dd83230581f54e6024217171dada84ea/rootfs

172.20.5.41 overlay 52.36 2.29 50.07 4.37% /run/containerd/io.containerd.runtime.v2.task/k8s.io/6374674721ba627b3ba83bcd861e2d117f36b120acde343d84318d74f0635f18/rootfs

172.20.5.41 tmpfs 8.01 0.00 8.01 0.00% /var/lib/kubelet/pods/01936dca-a8b7-49b0-b66a-c42a9e4691d2/volumes/kubernetes.io~projected/kube-api-access-qs4t5

172.20.5.41 tmpfs 8.01 0.00 8.01 0.00% /var/lib/kubelet/pods/471e5208-4a93-42d1-9c43-e66e5dbb56c8/volumes/kubernetes.io~projected/kube-api-access-hffbn

172.20.5.41 shm 0.07 0.00 0.07 0.00% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/f4d299bb9d1cf5b52bdc5031b8b251c4a73fbce0351d46c365662cc06ff2d5b6/shm

172.20.5.41 overlay 52.36 2.29 50.07 4.37% /run/containerd/io.containerd.runtime.v2.task/k8s.io/f4d299bb9d1cf5b52bdc5031b8b251c4a73fbce0351d46c365662cc06ff2d5b6/rootfs

172.20.5.41 shm 0.07 0.00 0.07 0.00% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/5f1c7e74fb57fd8e823e77403a980df8f626de8271854605db4ee8aaf257a2d7/shm

172.20.5.41 overlay 52.36 2.29 50.07 4.37% /run/containerd/io.containerd.runtime.v2.task/k8s.io/5f1c7e74fb57fd8e823e77403a980df8f626de8271854605db4ee8aaf257a2d7/rootfs

172.20.5.41 overlay 52.36 2.29 50.07 4.37% /run/containerd/io.containerd.runtime.v2.task/k8s.io/245c4baad8e199e0af8212a94491e3b59218b331d25c2f052c40a7f15726728a/rootfs

172.20.5.41 overlay 52.36 2.29 50.07 4.37% /run/containerd/io.containerd.runtime.v2.task/k8s.io/e2a926fdd54175bcb8d96bc25e679af0e7dfae5efb48c1417518e231917dbb6a/rootfs

172.20.5.41 overlay 52.36 2.29 50.07 4.37% /run/containerd/io.containerd.runtime.v2.task/k8s.io/efa5b28fcb013e499fdf2aff8e3426dfcb218ee2779ea7effa916a9ea4d651c7/rootfs

- Viewing node processes

# talosctl processes

NODE PID STATE THREADS CPU-TIME VIRTMEM RESMEM COMMAND

172.20.5.41 2406 S 8 9.81 1.5 GB 264 MB /usr/local/bin/kube-apiserver --admission-control-config-file=/system/config/kubernetes/kube-apiserver/admission-control-config.yaml --advertise-address=172.20.5.41 --allow-privileged=true --anonymous-auth=false --api-audiences=https://172.20.5.41:6443 --audit-log-maxage=30 --audit-log-maxbackup=10 --audit-log-maxsize=100 --audit-log-path=/var/log/audit/kube/kube-apiserver.log --audit-policy-file=/system/config/kubernetes/kube-apiserver/auditpolicy.yaml --authorization-mode=Node,RBAC --bind-address=0.0.0.0 --client-ca-file=/system/secrets/kubernetes/kube-apiserver/ca.crt --enable-admission-plugins=NodeRestriction --enable-bootstrap-token-auth=true --encryption-provider-config=/system/secrets/kubernetes/kube-apiserver/encryptionconfig.yaml --etcd-cafile=/system/secrets/kubernetes/kube-apiserver/etcd-client-ca.crt --etcd-certfile=/system/secrets/kubernetes/kube-apiserver/etcd-client.crt --etcd-keyfile=/system/secrets/kubernetes/kube-apiserver/etcd-client.key --etcd-servers=https://localhost:2379 --kubelet-client-certificate=/system/secrets/kubernetes/kube-apiserver/apiserver-kubelet-client.crt --kubelet-client-key=/system/secrets/kubernetes/kube-apiserver/apiserver-kubelet-client.key --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname --profiling=false --proxy-client-cert-file=/system/secrets/kubernetes/kube-apiserver/front-proxy-client.crt --proxy-client-key-file=/system/secrets/kubernetes/kube-apiserver/front-proxy-client.key --requestheader-allowed-names=front-proxy-client --requestheader-client-ca-file=/system/secrets/kubernetes/kube-apiserver/aggregator-ca.crt --requestheader-extra-headers-prefix=X-Remote-Extra- --requestheader-group-headers=X-Remote-Group --requestheader-username-headers=X-Remote-User --secure-port=6443 --service-account-issuer=https://172.20.5.41:6443 --service-account-key-file=/system/secrets/kubernetes/kube-apiserver/service-account.pub 
--service-account-signing-key-file=/system/secrets/kubernetes/kube-apiserver/service-account.key --service-cluster-ip-range=10.96.0.0/12 --tls-cert-file=/system/secrets/kubernetes/kube-apiserver/apiserver.crt --tls-cipher-suites=TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_ECDSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384,TLS_RSA_WITH_AES_256_GCM_SHA384,TLS_RSA_WITH_AES_128_GCM_SHA256 --tls-min-version=VersionTLS12 --tls-private-key-file=/system/secrets/kubernetes/kube-apiserver/apiserver.key

172.20.5.41 2148 S 9 4.09 12 GB 156 MB /usr/local/bin/etcd --advertise-client-urls=https://172.20.5.41:2379 --auto-tls=false --cert-file=/system/secrets/etcd/server.crt --client-cert-auth=true --data-dir=/var/lib/etcd --experimental-compact-hash-check-enabled=true --experimental-initial-corrupt-check=true --experimental-watch-progress-notify-interval=5s --key-file=/system/secrets/etcd/server.key --listen-client-urls=https://0.0.0.0:2379 --listen-peer-urls=https://0.0.0.0:2380 --name=talos-controller-0 --peer-auto-tls=false --peer-cert-file=/system/secrets/etcd/peer.crt --peer-client-cert-auth=true --peer-key-file=/system/secrets/etcd/peer.key --peer-trusted-ca-file=/system/secrets/etcd/ca.crt --trusted-ca-file=/system/secrets/etcd/ca.crt

172.20.5.41 2907 S 7 2.60 1.4 GB 117 MB /usr/local/bin/kube-controller-manager --use-service-account-credentials --allocate-node-cidrs=true --authentication-kubeconfig=/system/secrets/kubernetes/kube-controller-manager/kubeconfig --authorization-kubeconfig=/system/secrets/kubernetes/kube-controller-manager/kubeconfig --bind-address=127.0.0.1 --cluster-cidr=10.244.0.0/16 --cluster-signing-cert-file=/system/secrets/kubernetes/kube-controller-manager/ca.crt --cluster-signing-key-file=/system/secrets/kubernetes/kube-controller-manager/ca.key --configure-cloud-routes=false --controllers=*,tokencleaner --kubeconfig=/system/secrets/kubernetes/kube-controller-manager/kubeconfig --leader-elect=true --profiling=false --root-ca-file=/system/secrets/kubernetes/kube-controller-manager/ca.crt --service-account-private-key-file=/system/secrets/kubernetes/kube-controller-manager/service-account.key --service-cluster-ip-range=10.96.0.0/12 --tls-min-version=VersionTLS13

172.20.5.41 1 S 11 6.34 1.3 GB 97 MB /sbin/init

172.20.5.41 2133 S 11 3.72 2.1 GB 97 MB /usr/local/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubeconfig --cert-dir=/var/lib/kubelet/pki --config=/etc/kubernetes/kubelet.yaml --hostname-override=talos-controller-0 --kubeconfig=/etc/kubernetes/kubeconfig-kubelet --node-ip=172.20.5.41

172.20.5.41 1908 S 8 4.51 1.3 GB 68 MB /sbin/dashboard

172.20.5.41 2012 S 8 0.98 1.3 GB 67 MB /apid --enable-rbac --enable-ext-key-usage-check

172.20.5.41 1950 S 30 1.57 1.3 GB 65 MB /bin/containerd --address /run/containerd/containerd.sock --config /etc/cri/containerd.toml

172.20.5.41 2648 S 8 2.02 1.3 GB 60 MB /usr/local/bin/kube-scheduler --authentication-kubeconfig=/system/secrets/kubernetes/kube-scheduler/kubeconfig --authentication-tolerate-lookup-failure=false --authorization-kubeconfig=/system/secrets/kubernetes/kube-scheduler/kubeconfig --bind-address=127.0.0.1 --config=/system/config/kubernetes/kube-scheduler/scheduler-config.yaml --leader-elect=true --profiling=false --tls-min-version=VersionTLS13

172.20.5.41 2066 S 8 0.13 1.3 GB 58 MB /trustd

172.20.5.41 2778 S 6 0.20 1.3 GB 55 MB /usr/local/bin/kube-proxy --cluster-cidr=10.244.0.0/16 --conntrack-max-per-core=0 --hostname-override=talos-controller-0 --kubeconfig=/etc/kubernetes/kubeconfig --proxy-mode=iptables

172.20.5.41 1072 S 7 4.76 1.3 GB 46 MB /bin/containerd --address /system/run/containerd/containerd.sock --state /system/run/containerd --root /system/var/lib/containerd

172.20.5.41 2967 S 9 0.37 1.3 GB 41 MB /opt/bin/flanneld --ip-masq --kube-subnet-mgr

172.20.5.41 2242 S 13 0.09 1.3 GB 16 MB /bin/containerd-shim-runc-v2 -namespace k8s.io -id 7dd275f74c6cf27cebc8daf9e7ec82b4fd0e6b3d7b478608d6182d7920bcd1ac -address /run/containerd/containerd.sock

172.20.5.41 2230 S 12 0.08 1.3 GB 15 MB /bin/containerd-shim-runc-v2 -namespace k8s.io -id 65024d83d1b2d96d45513233818431f1b936fa2b15e1350775cc13d3be1c27b1 -address /run/containerd/containerd.sock

172.20.5.41 2726 S 13 0.10 1.3 GB 15 MB /bin/containerd-shim-runc-v2 -namespace k8s.io -id 5f1c7e74fb57fd8e823e77403a980df8f626de8271854605db4ee8aaf257a2d7 -address /run/containerd/containerd.sock

172.20.5.41 2684 S 12 0.07 1.3 GB 15 MB /bin/containerd-shim-runc-v2 -namespace k8s.io -id f4d299bb9d1cf5b52bdc5031b8b251c4a73fbce0351d46c365662cc06ff2d5b6 -address /run/containerd/containerd.sock

172.20.5.41 2258 S 12 0.06 1.3 GB 15 MB /bin/containerd-shim-runc-v2 -namespace k8s.io -id 13b0934287181a10637e52e3d9e2aeac18f3791b0e3e8f4fd5145a7265ab7c2e -address /run/containerd/containerd.sock

172.20.5.41 2046 S 12 0.03 1.3 GB 15 MB /bin/containerd-shim-runc-v2 -namespace system -id trustd -address /system/run/containerd/containerd.sock

172.20.5.41 2121 S 11 0.04 1.3 GB 15 MB /bin/containerd-shim-runc-v2 -namespace system -id etcd -address /run/containerd/containerd.sock

172.20.5.41 2092 S 12 0.06 1.3 GB 14 MB /bin/containerd-shim-runc-v2 -namespace system -id kubelet -address /run/containerd/containerd.sock

172.20.5.41 1971 S 12 0.06 1.3 GB 14 MB /bin/containerd-shim-runc-v2 -namespace system -id apid -address /system/run/containerd/containerd.sock

172.20.5.41 1074 S 1 0.22 1.5 MB 1.2 MB /sbin/udevd --resolve-names=never

- Viewing the kernel log

# talosctl dmesg | head -n 20

172.20.5.41: kern: notice: [2024-09-15T02:51:41.506860175Z]: Linux version 6.6.43-talos (@buildkitsandbox) (gcc (GCC) 13.2.0, GNU ld (GNU Binutils) 2.42) #1 SMP Mon Aug 5 13:28:56 UTC 2024

172.20.5.41: kern: info: [2024-09-15T02:51:41.506860175Z]: Command line: BOOT_IMAGE=/A/vmlinuz talos.platform=metal talos.config=none console=ttyS0 console=tty0 init_on_alloc=1 slab_nomerge pti=on consoleblank=0 nvme_core.io_timeout=4294967295 printk.devkmsg=on ima_template=ima-ng ima_appraise=fix ima_hash=sha512

172.20.5.41: kern: info: [2024-09-15T02:51:41.506860175Z]: BIOS-provided physical RAM map:

172.20.5.41: kern: info: [2024-09-15T02:51:41.506860175Z]: BIOS-e820: [mem 0x0000000000000000-0x000000000009fbff] usable

172.20.5.41: kern: info: [2024-09-15T02:51:41.506860175Z]: BIOS-e820: [mem 0x000000000009fc00-0x000000000009ffff] reserved

172.20.5.41: kern: info: [2024-09-15T02:51:41.506860175Z]: BIOS-e820: [mem 0x00000000000f0000-0x00000000000fffff] reserved

172.20.5.41: kern: info: [2024-09-15T02:51:41.506860175Z]: BIOS-e820: [mem 0x0000000000100000-0x00000000bffd9fff] usable

172.20.5.41: kern: info: [2024-09-15T02:51:41.506860175Z]: BIOS-e820: [mem 0x00000000bffda000-0x00000000bfffffff] reserved

172.20.5.41: kern: info: [2024-09-15T02:51:41.506860175Z]: BIOS-e820: [mem 0x00000000feffc000-0x00000000feffffff] reserved

172.20.5.41: kern: info: [2024-09-15T02:51:41.506860175Z]: BIOS-e820: [mem 0x00000000fffc0000-0x00000000ffffffff] reserved

172.20.5.41: kern: info: [2024-09-15T02:51:41.506860175Z]: BIOS-e820: [mem 0x0000000100000000-0x000000023fffffff] usable

172.20.5.41: kern: info: [2024-09-15T02:51:41.506860175Z]: NX (Execute Disable) protection: active

172.20.5.41: kern: info: [2024-09-15T02:51:41.506860175Z]: APIC: Static calls initialized

172.20.5.41: kern: info: [2024-09-15T02:51:41.506860175Z]: SMBIOS 3.0.0 present.

172.20.5.41: kern: info: [2024-09-15T02:51:41.506860175Z]: DMI: QEMU Standard PC (i440FX + PIIX, 1996), BIOS rel-1.16.2-0-gea1b7a073390-prebuilt.qemu.org 04/01/2014

172.20.5.41: kern: info: [2024-09-15T02:51:41.506860175Z]: Hypervisor detected: KVM

172.20.5.41: kern: info: [2024-09-15T02:51:41.506860175Z]: kvm-clock: Using msrs 4b564d01 and 4b564d00

172.20.5.41: kern: info: [2024-09-15T02:51:41.506861175Z]: kvm-clock: using sched offset of 9872295078 cycles

172.20.5.41: kern: info: [2024-09-15T02:51:41.506863175Z]: clocksource: kvm-clock: mask: 0xffffffffffffffff max_cycles: 0x1cd42e4dffb, max_idle_ns: 881590591483 ns

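172.20.5.41: kern: info: [2024-09-15T02:51:41.506866175Z]: tsc: Detected 2399.998 MHz processor

Things to know

- Talos nodes do not support changing the time zone; they always run in UTC.

- Certificates: initialization generates CAs valid for 10 years by default, while client certificates such as the one in kubeconfig are valid for 1 year and can be renewed simply by running talosctl kubeconfig again.

- Talos is driven entirely by its API, so it never has a shell or SSH. This takes some time to get used to, but from a security standpoint it is a good thing.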
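Since the client certificate embedded in kubeconfig lasts only a year, it can be worth checking how long the current one has left. The sketch below extracts the first inline `client-certificate-data` entry from a kubeconfig file and decodes it to PEM. It assumes `awk` and `base64` are available on the workstation and that the certificate is stored inline in the file; `kubeconfig_client_cert` is a made-up helper name:

```shell
#!/bin/sh
# Print the first inline client certificate of a kubeconfig file, decoded to PEM.
kubeconfig_client_cert() {
  awk '$1 == "client-certificate-data:" { print $2; exit }' "$1" | base64 -d
}

# Example (assumes ./kubeconfig was written by `talosctl kubeconfig .` and that
# openssl is installed); prints a line of the form "notAfter=<date>":
#   kubeconfig_client_cert ./kubeconfig | openssl x509 -noout -enddate
#
# Renew by downloading a fresh kubeconfig over the old file:
#   talosctl kubeconfig . --force
```

The helper only reads the file, so it is safe to run against a kubeconfig that is in active use.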