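Elasticsearch + Kibana + Fluent-bit Log Collection

Preface

This article briefly explains how to deploy Elasticsearch, Kibana, and Fluent Bit on Kubernetes. Fluent Bit collects the logs, and Kibana is used to browse and visualize them.

The services deployed here make up a typical EFK logging stack (these components at minimum). Even with a full EFK stack in place, picking out the logs of a single Pod still requires further filtering. Sometimes we do not need everything the cluster produces and care only about the logs of one namespace or one module. That calls for a more flexible way to deploy the log agent, usually called the "sidecar" pattern, which meets this need easily.

In the sidecar pattern, a Pod typically runs two containers: the main application container and a companion container that acts as the log agent. The two containers share a data volume.

So there are two log collection approaches in a container orchestration cluster:

Option 1: Sidecar pattern

Option 2: DaemonSet pattern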

Deployment inventory

- Elasticsearch: 6.8.16

- Kibana: 6.8.16

- Fluent-bit: 1.7

Elasticsearch deployment

- configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: es-config
data:
  elasticsearch.yml: |
    cluster.name: contaner-log
    network.host: 0.0.0.0
    http.port: 9200
    transport.host: 127.0.0.1
    transport.tcp.port: 9300
    bootstrap.memory_lock: false
    xpack.security.enabled: true
    xpack.monitoring.enabled: false
    xpack.graph.enabled: false
    xpack.watcher.enabled: false
    xpack.ml.enabled: false
    indices.query.bool.max_clause_count: 10240
  ES_JAVA_OPTS: -Xms2046m -Xmx4096m

Note the xpack.security.enabled: true setting: X-Pack security must be enabled.

- service.yaml

apiVersion: v1
kind: Service
metadata:
  name: elasticsearch-svc
spec:
  selector:
    app: elasticsearch
    env: prod
  ports:
    - name: http
      protocol: TCP
      port: 9200
      targetPort: 9200
  type: ClusterIP
  #clusterIP: None
  sessionAffinity: None

- deployment.yaml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: elasticsearch
spec:
  serviceName: elasticsearch
  selector:
    matchLabels:
      app: elasticsearch
      env: prod
  replicas: 1
  template:
    metadata:
      labels:
        app: elasticsearch
        env: prod
    spec:
      nodeSelector:
        app: logging-platform
      securityContext:
        fsGroup: 1000
      initContainers:
        - name: fix-permissions
          image: busybox:latest
          imagePullPolicy: IfNotPresent
          securityContext:
            privileged: true
          command: ["sh", "-c", "chown -R 1000:1000 /usr/share/elasticsearch/data"]
          volumeMounts:
            - name: es-data
              mountPath: /usr/share/elasticsearch/data
        - name: init-sysctl
          image: busybox
          imagePullPolicy: IfNotPresent
          securityContext:
            privileged: true
          command: ["sysctl", "-w", "vm.max_map_count=262144"]
      containers:
        - name: elasticsearch
          image: docker.elastic.co/elasticsearch/elasticsearch:6.8.16
          resources:
            requests:
              memory: 2Gi
          env:
            - name: discovery.type
              value: single-node
            - name: ES_JAVA_OPTS
              valueFrom:
                configMapKeyRef:
                  name: es-config
                  key: ES_JAVA_OPTS
          #readinessProbe:
          #  httpGet:
          #    scheme: HTTP
          #    path: /_cluster/health?local=true
          #    port: 9200
          #  initialDelaySeconds: 5
          securityContext:
            privileged: true
            runAsUser: 1000
            capabilities:
              add:
                - IPC_LOCK
                - SYS_RESOURCE
          volumeMounts:
            - name: volume-localtime
              mountPath: /etc/localtime
            - name: es-data
              mountPath: /usr/share/elasticsearch/data
            - name: es-config
              mountPath: /usr/share/elasticsearch/config/elasticsearch.yml
              subPath: elasticsearch.yml
      volumes:
        - name: volume-localtime
          hostPath:
            path: /etc/localtime
        - name: es-data
          hostPath:
            path: /opt/haid/dbs/elasticsearch
        - name: es-config
          configMap:
            name: es-config
            items:
              - key: elasticsearch.yml
                path: elasticsearch.yml

Tips: Because X-Pack security is enabled, the readiness probe (commented out above) must send credentials if you turn it on.
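As an illustration of what "sending credentials" means for the health endpoint, here is a minimal Python sketch that builds (without sending) an HTTP Basic request against the same path used in the commented-out probe. The function name build_health_request is hypothetical, and the user and password are the placeholder values used in this article. The same Authorization header value could be supplied to a Kubernetes httpGet probe through its httpHeaders field.

```python
import base64
import urllib.request


def build_health_request(base_url: str, user: str, password: str) -> urllib.request.Request:
    """Build, but do not send, an authenticated request to the cluster health API."""
    # HTTP Basic auth is "user:password", base64-encoded, prefixed with "Basic ".
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    req = urllib.request.Request(f"{base_url}/_cluster/health?local=true")
    req.add_header("Authorization", f"Basic {token}")
    return req


if __name__ == "__main__":
    # Placeholder credentials; use the ones generated for your cluster.
    req = build_health_request("http://elasticsearch-svc:9200", "elastic", "password")
    print(req.full_url)
    print(req.get_header("Authorization"))
```

Keep in mind that a header written into a probe definition is visible to anyone who can read the Pod spec, so a low-privilege account is the safer choice for this purpose.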

Create the Kubernetes resources

kubectl apply -f .

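Once the application has initialized, run the following command to generate the usernames and passwords

$ kubectl exec -it elasticsearch-0 -- bin/elasticsearch-setup-passwords auto -b

<other usernames and passwords omitted>

Changed password for user elastic

PASSWORD elastic = password # administrator account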

Kibana deployment

- configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: kibana-config
  labels:
    component: kibana
data:
  elasticsearch_username: kibana # use the kibana credentials generated above
  elasticsearch_password: xxxxxxxxx

A Secret resource is recommended for these credentials instead of a ConfigMap.
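To make the Secret recommendation concrete, the following Python sketch generates a Secret manifest as JSON (kubectl apply -f accepts JSON as well as YAML). The Secret name kibana-secret and the helper build_secret are hypothetical; the key names mirror the ConfigMap above. If you switch to a Secret, the Kibana Pod spec would need secretKeyRef in place of configMapKeyRef.

```python
import base64
import json


def build_secret(name: str, values: dict) -> dict:
    """Return a Kubernetes Secret manifest with base64-encoded data values."""
    encoded = {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in values.items()
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": encoded,
    }


if __name__ == "__main__":
    # "kibana-secret" is an assumed name; the password is a placeholder.
    manifest = build_secret(
        "kibana-secret",
        {"elasticsearch_username": "kibana", "elasticsearch_password": "xxxxxxxxx"},
    )
    print(json.dumps(manifest, indent=2))
```

Note that base64 is an encoding, not encryption; the benefit of a Secret comes from access control and from keeping credentials out of ordinary configuration.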

- service.yaml

apiVersion: v1
kind: Service
metadata:
  name: kibana-svc
  labels:
    app: kibana
    env: prod
spec:
  selector:
    app: kibana
    env: prod
  ports:
    - name: http
      port: 80
      targetPort: http

- deployment.yaml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: kibana
spec:
  serviceName: kibana
  selector:
    matchLabels:
      app: kibana
      env: prod
  replicas: 1
  template:
    metadata:
      labels:
        app: kibana
        env: prod
    spec:
      terminationGracePeriodSeconds: 30
      nodeSelector:
        app: logging-platform
      containers:
        - name: kibana
          image: docker.elastic.co/kibana/kibana:6.8.16
          env:
            - name: ELASTICSEARCH_URL
              value: http://elasticsearch-svc:9200
            - name: XPACK_SECURITY_ENABLED
              value: "true"
            - name: ELASTICSEARCH_USERNAME
              valueFrom:
                configMapKeyRef:
                  name: kibana-config
                  key: elasticsearch_username
            - name: ELASTICSEARCH_PASSWORD
              valueFrom:
                configMapKeyRef:
                  name: kibana-config
                  key: elasticsearch_password
          envFrom:
            - configMapRef:
                name: kibana-config
          ports:
            - containerPort: 5601
              name: http
          resources:
            limits:
              cpu: 1000m
            requests:
              cpu: 100m

Note that X-Pack must be enabled here as well.

Ingress

Expose the Kibana service through an Ingress so that it can be reached from outside the cluster.

# extensions/v1beta1 Ingress was removed in Kubernetes 1.22; newer clusters need networking.k8s.io/v1
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: kibana-ingress
  annotations:
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.ingress.kubernetes.io/proxy-body-size: 128m
    nginx.ingress.kubernetes.io/whitelist-source-range: "<whitelisted IPs>"
    nginx.ingress.kubernetes.io/proxy-buffering: "on"
    nginx.ingress.kubernetes.io/proxy-buffer-size: "8k"
spec:
  rules:
    - host: c-log.vqiu.cn
      http:
        paths:
          - path: /
            backend:
              serviceName: kibana-svc
              servicePort: 80

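Collecting container logs with Fluent-bit

Why Fluent-bit? It is lighter than Fluentd and uses fewer resources. The trade-off is a smaller set of plugins, since it is the younger project.

Nginx

Configure fluent-bit with a ConfigMap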

apiVersion: v1
kind: ConfigMap
metadata:
  name: fluentbit-config
  labels:
    app.kubernetes.io/name: fluentbit
data:
  fluent-bit.conf: |-
    [SERVICE]
        Parsers_File parsers.conf
        Daemon Off
        Log_Level info
        HTTP_Server off
        HTTP_Listen 0.0.0.0
        HTTP_Port 24224
    [INPUT]
        Name tail
        Tag nginx-access.*
        Path /var/log/nginx/*.log
        Mem_Buf_Limit 5MB
        DB /var/log/flt_logs.db
        Refresh_Interval 5
        Ignore_Older 10s
        Rotate_Wait 5
    [FILTER]
        Name parser
        Match *
        Parser nginx
        # The tail input stores each raw line under the key "log" by default,
        # so the parser filter has to read that key (the original used "aux").
        Key_name log
    [FILTER]
        Name record_modifier
        Match *
        Key_name message
        Record hostname ${HOSTNAME}
        Record namespace mdp-system
        Record environment prod
    [OUTPUT]
        Name es
        Match *
        Host elasticsearch-svc
        Port 9200
        HTTP_User User
        HTTP_Passwd Password
        Logstash_Format On
        Retry_Limit False
        Time_Key @timestamp
        Logstash_Prefix nginx-access
  parsers.conf: |
    [PARSER]
        Name nginx
        Format regex
        Regex ^(?<remote>[^ ]*) (?<host>[^ ]*) (?<user>[^ ]*) \[(?<time>[^\]]*)\] "(?<method>\S+)(?: +(?<path>[^\"]*?)(?: +\S*)?)?" (?<code>[^ ]*) (?<size>[^ ]*)(?: "(?<referer>[^\"]*)" "(?<agent>[^\"]*)")? \"-\"$
        Time_Key time
        Time_Format %d/%b/%Y:%H:%M:%S %z

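Tips: If Elasticsearch runs in a different namespace, adjust the Host address accordingly (for example elasticsearch-svc.<namespace>).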
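With Logstash_Format On, the es output writes to daily indices named from Logstash_Prefix plus a date, which is what you will later match with a Kibana index pattern such as nginx-access-*. The Python sketch below shows how an nginx timestamp in the parser's Time_Format maps to an index name. It assumes the default date format %Y.%m.%d and that the date is taken in UTC; the function name logstash_index is hypothetical.

```python
from datetime import datetime, timezone

# Same layout as Time_Format in the nginx parser above.
NGINX_TIME_FORMAT = "%d/%b/%Y:%H:%M:%S %z"


def logstash_index(prefix: str, nginx_time: str, date_format: str = "%Y.%m.%d") -> str:
    """Return the daily index name a record with this timestamp would land in."""
    # Parse the timestamp with its UTC offset, then normalize to UTC (assumption).
    ts = datetime.strptime(nginx_time, NGINX_TIME_FORMAT).astimezone(timezone.utc)
    return f"{prefix}-{ts.strftime(date_format)}"


if __name__ == "__main__":
    print(logstash_index("nginx-access", "03/Jul/2021:12:01:01 +0000"))
    # A request logged just after midnight in UTC+8 still falls on the previous UTC day.
    print(logstash_index("nginx-access", "04/Jul/2021:02:00:00 +0800"))
```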

Nginx deployment YAML

apiVersion: v1
kind: Service
metadata:
  name: nginx-log-sidecar
  labels:
    app: nginx
spec:
  ports:
    - name: http
      port: 80
      targetPort: 80
  selector:
    app: nginx
  type: ClusterIP
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-log-sidecar
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:latest
          imagePullPolicy: Always
          volumeMounts:
            - mountPath: /var/log/nginx
              name: log-volume
          resources:
            requests:
              cpu: 10m
              memory: 50Mi
            limits:
              cpu: 50m
              memory: 100Mi
        - name: fluentbit-logger
          image: fluent/fluent-bit:1.7
          imagePullPolicy: IfNotPresent
          resources:
            requests:
              cpu: 20m
              memory: 100Mi
            limits:
              cpu: 100m
              memory: 200Mi
          volumeMounts:
            - mountPath: /fluent-bit/etc
              name: config
            - mountPath: /var/log/nginx
              name: log-volume
      volumes:
        - name: config
          configMap:
            name: fluentbit-config
        - name: log-volume
          emptyDir: {}

A Java backend application

The backend prints logs in the following format:

[2021-07-03 12:01:01] [INFO] [springfox.documentation.spring.web.readers.operation.CachingOperationNameGenerator:40] Generating unique operation named: auditUsingGET_4

[2021-07-03 12:01:01] [INFO] [springfox.documentation.spring.web.readers.operation.CachingOperationNameGenerator:40] Generating unique operation named: createUsingPOST_136

[2021-07-03 12:01:01] [INFO] [springfox.documentation.spring.web.readers.operation.CachingOperationNameGenerator:40] Generating unique operation named: deleteUsingDELETE_136

[2021-07-03 12:01:01] [INFO] [springfox.documentation.spring.web.readers.operation.CachingOperationNameGenerator:40] Generating unique operation named: getUsingGET_136

[2021-07-03 12:01:01] [INFO] [springfox.documentation.spring.web.readers.operation.CachingOperationNameGenerator:40] Generating unique operation named: listUsingGET_144

[2021-07-03 12:01:01] [INFO] [springfox.documentation.spring.web.readers.operation.CachingOperationNameGenerator:40] Generating unique operation named: updateUsingPUT_136

[2021-07-03 12:01:01] [INFO] [springfox.documentation.spring.web.readers.operation.CachingOperationNameGenerator:40] Generating unique operation named: loginUsingPOST_1

[2021-07-03 12:01:01] [INFO] [springfox.documentation.spring.web.readers.operation.CachingOperationNameGenerator:40] Generating unique operation named: getMatterInfoUsingPOST_1

[2021-07-03 12:01:01] [INFO] [springfox.documentation.spring.web.readers.operation.CachingOperationNameGenerator:40] Generating unique operation named: getOrganizationInfoUsingPOST_1

[2021-07-03 12:01:01] [INFO] [springfox.documentation.spring.web.readers.operation.CachingOperationNameGenerator:40] Generating unique operation named: getStorageInfoUsingPOST_2

[2021-07-03 12:01:01] [INFO] [springfox.documentation.spring.web.readers.operation.CachingOperationNameGenerator:40] Generating unique operation named: getStorageInfoUsingPOST_3

[2021-07-03 12:01:01] [INFO] [springfox.documentation.spring.web.readers.operation.CachingOperationNameGenerator:40] Generating unique operation named: createUsingPOST_137

Based on the log format above, the following regular expression parses each line:

^\[(?<time>\d{4}-\d{1,2}-\d{1,2} \d{1,2}:\d{1,2}:\d{1,2})\] \[(?<level>(INFO|ERROR|WARN))\] \[(?<thread>.*)\] (?<message>[\s\S].*)

You can test it at https://rubular.com/r/X7BH0M4Ivm
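The expression can also be checked offline. The Python sketch below ports it to Python syntax (named groups are written (?P<name>...) instead of (?<name>...)) and runs it against one of the sample lines. The helper parse_first_line and the stack-trace line used as a negative example are illustrative assumptions, not part of the original setup.

```python
import re
from datetime import datetime

# Same pattern as above, with Ruby-style (?<name>...) groups rewritten for Python.
JAVA_LOG_RE = re.compile(
    r"^\[(?P<time>\d{4}-\d{1,2}-\d{1,2} \d{1,2}:\d{1,2}:\d{1,2})\] "
    r"\[(?P<level>(INFO|ERROR|WARN))\] "
    r"\[(?P<thread>.*)\] "
    r"(?P<message>[\s\S].*)"
)


def parse_first_line(line: str):
    """Return the parsed fields if the line starts a new log record, else None."""
    match = JAVA_LOG_RE.match(line)
    if match is None:
        return None  # e.g. a stack-trace continuation line
    fields = match.groupdict()
    fields["time"] = datetime.strptime(fields["time"], "%Y-%m-%d %H:%M:%S")
    return fields


if __name__ == "__main__":
    sample = (
        "[2021-07-03 12:01:01] [INFO] "
        "[springfox.documentation.spring.web.readers.operation.CachingOperationNameGenerator:40] "
        "Generating unique operation named: auditUsingGET_4"
    )
    print(parse_first_line(sample))
    # A hypothetical continuation line does not match and would be appended
    # to the previous record in multiline mode.
    print(parse_first_line("    at com.example.Foo.bar(Foo.java:10)"))
```

Lines that return None here are the ones a multiline tail input treats as continuations of the preceding record, which is why the same expression is reused as the first-line parser.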

Deployment YAML

- configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: webapp-fb-config
data:
  fluent-bit.conf: |-
    [SERVICE]
        Flush 1
        Log_Level info
        Daemon off
        Parsers_File parsers.conf
        HTTP_Server Off
        HTTP_Listen 0.0.0.0
        HTTP_Port 2020
    @INCLUDE input-java.conf
    #@INCLUDE filter-stdout.conf
    @INCLUDE output-stdout.conf
  input-java.conf: |-
    [INPUT]
        Name tail
        Tag javaLog
        Path /tmp/log/*
        Mem_Buf_Limit 5MB
        Buffer_Chunk_Size 256KB
        Buffer_Max_Size 512KB
        Multiline On
        Refresh_Interval 10
        #Skip_Long_Lines On
        Parser_Firstline java_multiline
        DB /var/log/flb_kube.db
  filter-stdout.conf: |-
    [FILTER]
        Name record_modifier
        Match javaLog
        Key_name log
        Record hostname ${HOSTNAME}
        Record environment dev
  output-stdout.conf: |-
    [OUTPUT]
        Name es
        Match *
        Host c-log-es-slb.xx.local
        Port 9200
        HTTP_User User
        HTTP_Passwd PASSWORD
        Logstash_Format On
        Retry_Limit False
        Time_Key @timestamp
        Logstash_Prefix javaLog
  parsers.conf: |-
    [PARSER]
        Name java_multiline
        Format regex
        Time_Key time
        Time_Format %Y-%m-%d %H:%M:%S
        Regex /^\[(?<time>\d{4}-\d{1,2}-\d{1,2} \d{1,2}:\d{1,2}:\d{1,2})\] \[(?<level>(INFO|ERROR|WARN))\] \[(?<thread>.*)\] (?<message>[\s\S].*)/

- deployment.yaml

# Object names must be lowercase (RFC 1123), so the javeApp object names are
# lowercased here; label values may keep mixed case.
kind: Deployment
apiVersion: apps/v1
metadata:
  name: javeapp-deploy
  annotations:
    app.kubernetes.io/developer: "hecx02"
    app.kubernetes.io/pm: "null"
    app.kubernetes.io/ops: "qiush01"
    app.kubernetes.io/repository: "https://gitlab.xx.com/Project/javeApp.git"
    app.kubernetes.io/managed-by: jenkins
spec:
  replicas: 1
  selector:
    matchLabels:
      app: javeApp
      env: dev
  template:
    metadata:
      labels:
        app: javeApp
        env: dev
    spec:
      terminationGracePeriodSeconds: 20
      imagePullSecrets:
        - name: haid-registry-secret
      nodeSelector:
        app: javeApp
      containers:
        # The original listed two "name" keys for this container; only one is kept.
        - name: fluentbit-sidecar
          image: fluent-bit:1.7
          imagePullPolicy: IfNotPresent
          resources:
            limits:
              cpu: 200m
              memory: 800Mi
            requests:
              cpu: 20m
              memory: 100Mi
          volumeMounts:
            - name: fluentbit-config
              mountPath: /fluent-bit/etc
            - name: log-storage
              mountPath: /tmp/log
        - name: webapp
          image: registry.xxx.com/project/javeApp:#IMAGE_TAG#
          imagePullPolicy: IfNotPresent
          ports:
            - name: http
              containerPort: 8080
              protocol: TCP
          resources:
            requests:
              cpu: 800m
              memory: "2Gi"
            limits:
              cpu: 2
              memory: "6Gi"
          livenessProbe:
            initialDelaySeconds: 120
            periodSeconds: 10
            timeoutSeconds: 5
            httpGet:
              path: /
              port: 8080
              scheme: HTTP
          readinessProbe:
            initialDelaySeconds: 120
            periodSeconds: 10
            timeoutSeconds: 5
            httpGet:
              path: /
              port: 8080
              scheme: HTTP
          volumeMounts:
            - name: config
              mountPath: /usr/local/tomcat/webapps/ROOT/WEB-INF/classes/dbconfig.properties
              subPath: dbconfig.properties
            - name: log-storage
              mountPath: /usr/local/logs
      volumes:
        - name: config
          configMap:
            name: javeapp-config
        - name: fluentbit-config
          configMap:
            # Must match the ConfigMap defined in configmap.yaml above.
            name: webapp-fb-config
        - name: log-storage
          emptyDir: {}

Application preview

Kibana login screenshot

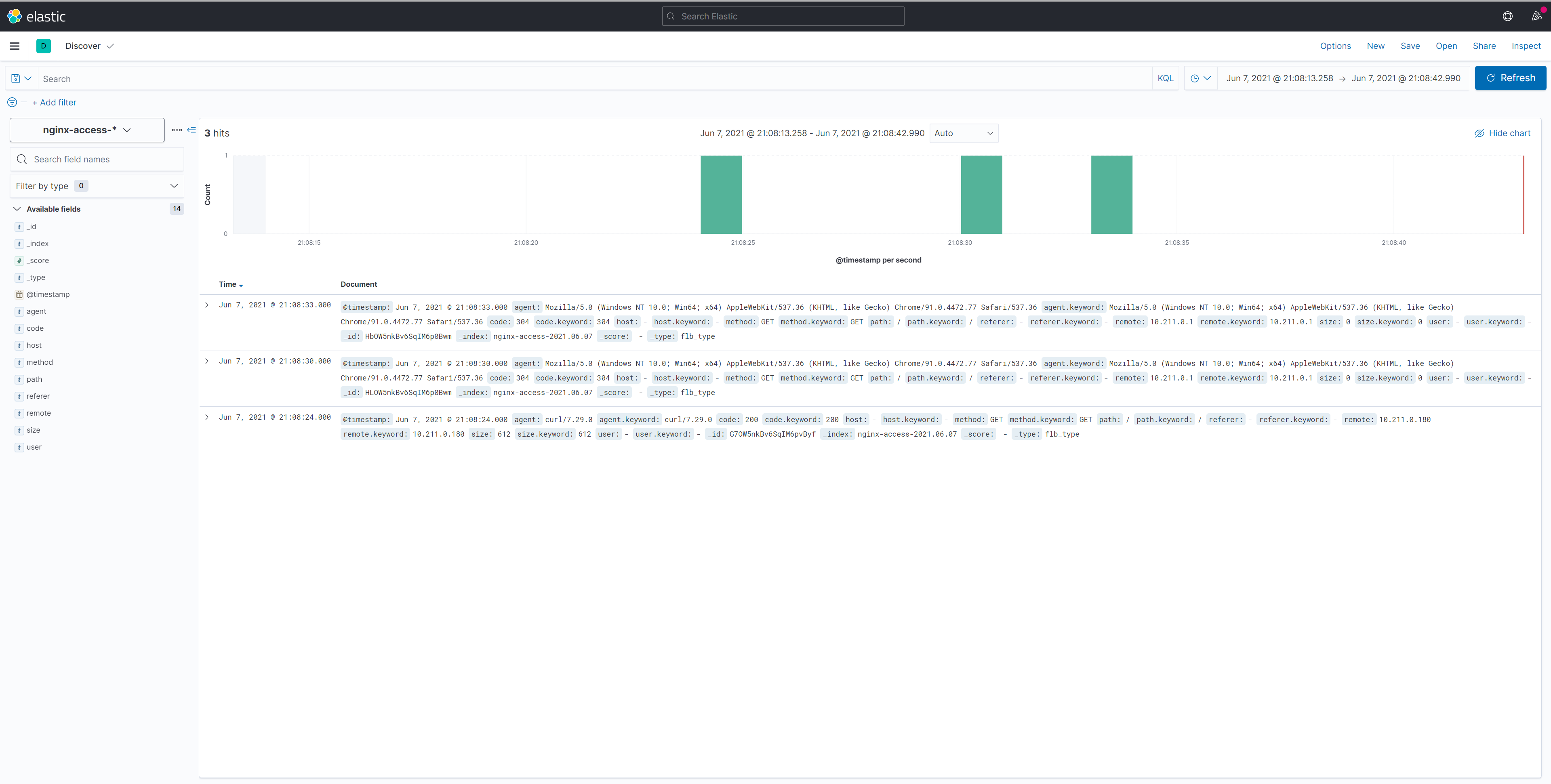

Nginx log screenshot

Common issues

1. lines are too long. Skipping file

[xxxx/xx/xx 01:07:03] [error] [input:tail:tail.0] file=/usr/local/xx/log.txt requires a larger buffer size, lines are too long. Skipping file.

[xxxx/xx/xx 01:08:03] [error] [input:tail:tail.0] file=/usr/local/xx/log_.txt requires a larger buffer size, lines are too long. xx file.

[xxxx/xx/xx 01:09:03] [error] [input:tail:tail.0] file=/usr/local/xx/log_.txt requires a larger buffer size, lines are too long. Skipping file.

Fix:

Set Buffer_Chunk_Size and Buffer_Max_Size. Buffer_Chunk_Size defaults to 32KB, and a line longer than that can trigger the error above. Buffer_Max_Size defaults to the same value as Buffer_Chunk_Size.

[INPUT]
    Name tail
    Mem_Buf_Limit 5MB
    Buffer_Chunk_Size 256KB
    Buffer_Max_Size 512KB
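To choose these values from data rather than by guessing, you can measure the longest line in an existing log file. The Python sketch below is one way to do that. The helper names and the 2x headroom factor are assumptions for illustration, not Fluent Bit recommendations, and with multiline parsing enabled a whole multi-line record may need to fit, so treat the result as a lower bound.

```python
import math
import sys


def longest_line_bytes(path: str) -> int:
    """Return the length in bytes of the longest line in the file, newline included."""
    longest = 0
    with open(path, "rb") as handle:
        for line in handle:
            longest = max(longest, len(line))
    return longest


def suggest_buffer_kb(longest: int, headroom: float = 2.0) -> int:
    """Suggest a buffer size in KB: the longest line times a headroom factor, rounded up."""
    return math.ceil(longest * headroom / 1024)


if __name__ == "__main__":
    # Usage: python longest_line.py /path/to/app.log
    size = longest_line_bytes(sys.argv[1])
    print(f"longest line: {size} bytes, suggested Buffer_Max_Size: {suggest_buffer_kb(size)}KB")
```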

References

- [1] https://www.deepnetwork.com/blog/2020/03/13/password-protected-efk-stack-on-k8s.html

- [2] https://blogs.halodoc.io/production-grade-eks-logging-with-efk-via-sidecar/

- [3] https://www.studytonight.com/post/setup-fluent-bit-with-elasticsearch-authentication-enabled-in-kubernetes

- [4] https://github.com/thisisabhishek/efk-with-xpack-security